The AI FACTOR Framework: Driving Strategic Impact

Artificial Intelligence (AI) is now embedded in nearly every enterprise platform, product, and process. From predictive analytics in finance to generative assistants in daily operations, AI is often marketed as a game-changer. But in the rush to adopt these tools, one important question is often overlooked: is the AI actually delivering business value?

This is a concern we hear regularly from investors analysing portfolios, customers evaluating vendors, and technology leaders reviewing internal performance. Too often, AI is added because it’s trendy, not because it solves a real problem. To evaluate whether an AI solution is actually working (not just technically functional, but strategically impactful), we recommend the A.I. F.A.C.T.O.R. Framework. It gives business leaders a practical way to assess Enterprise AI solutions for business impact across the areas that matter most for enterprise success.

The A.I. F.A.C.T.O.R. Framework

This framework is built on eight core evaluation criteria. Together, they help determine whether Enterprise AI solutions are improving your business or simply adding noise.

A – Alignment

Is the AI clearly tied to a business goal or customer need? The best AI initiatives serve a measurable purpose. Whether it’s streamlining a process, boosting productivity, or improving the customer journey, the technology should connect directly to outcomes that matter. If the AI isn’t solving a clearly defined problem, it is just another line item in your tech stack.

I – Intelligence

Is the solution truly intelligent or just automated? There’s a big difference between rule-based automation and machine learning. Real AI should demonstrate pattern recognition, adaptive learning, and contextual awareness. If it is just following scripts or predefined workflows, it is not AI in the sense that drives competitive advantage.

F – Fit

How well does the AI fit into existing systems and workflows? Enterprise adoption doesn’t work if it disrupts core operations. Good AI integrates cleanly with your CRM, ERP, cloud environment, or custom apps. It should enhance how people already work, not force teams to learn something completely new.

A – Accuracy

Are the AI outputs consistently reliable and actionable? From forecasts to recommendations, results must be precise enough to support decision-making. Inaccurate outputs drain time, damage trust, and can create downstream risks, especially in sectors like finance, healthcare, or logistics. Every AI rollout should include performance benchmarks and regular validation.

C – Cost-Benefit

Does the business value exceed the total cost of ownership? Total cost includes more than licensing and implementation: think about training, cloud compute, model monitoring, data governance, and opportunity cost. If the AI doesn’t save time, increase revenue, or improve margins, it is not worth the investment, especially at enterprise scale.

T – Transparency

Can users and stakeholders understand how the AI works? With regulatory expectations growing in the U.S. and globally, black-box systems are a red flag. Teams should be able to explain the logic behind recommendations, understand risks, and audit outcomes. This matters both for compliance and for user trust.

O – Optimization

Is the AI improving over time through learning and feedback? Static models are a missed opportunity. An effective AI application should adjust to new data, user inputs, and business trends. Continuous optimization is the foundation of long-term value, especially in fast-changing environments.

R – Responsiveness

Can the AI adjust to new situations, edge cases, or changing conditions? The business world doesn’t sit still. Whether it’s a change in customer behavior, a supply chain issue, or a new compliance rule, your AI tools need to keep up. Responsiveness helps teams avoid service delays, inaccurate insights, and manual firefighting.

Why This Matters for Business Leaders

Enterprises are investing heavily in AI, often without a clear structure to measure ROI. That is risky. You need to know not just whether your AI is functioning, but whether it is moving your business forward.

The A.I. F.A.C.T.O.R. Framework helps you:

- Audit internal projects or pilot programs
- Vet AI vendors during RFP processes
- Align product and IT teams with leadership goals
- Improve how you report AI progress to the board

It is a tool for strategic alignment, not just technical review, and it is especially useful when evaluating Enterprise AI solutions for business impact.

How to Apply This in Your Organization

If you’re currently using AI, use this framework to assess performance. If you’re planning to roll out a new solution, use it as a pre-check before committing. Ask your team:

- Where is our AI aligned with business objectives?
- Are we tracking model accuracy and financial impact?
- How well does it integrate with our current tools?
- What is the plan for model optimization and oversight?

Even if you’re only in the planning phase, having this framework in place will make future deployments smoother, faster, and more effective.

Final Thought: AI That Works Means AI That Delivers

AI doesn’t need to be perfect, but it must be productive. Business leaders need more than impressive demos; they need measurable outcomes. The A.I. F.A.C.T.O.R. Framework keeps teams focused on what matters: real intelligence, real value, and real results. This is especially true when choosing Enterprise AI solutions for business impact, which Ignitho delivers with a focus on outcomes, not just algorithms.

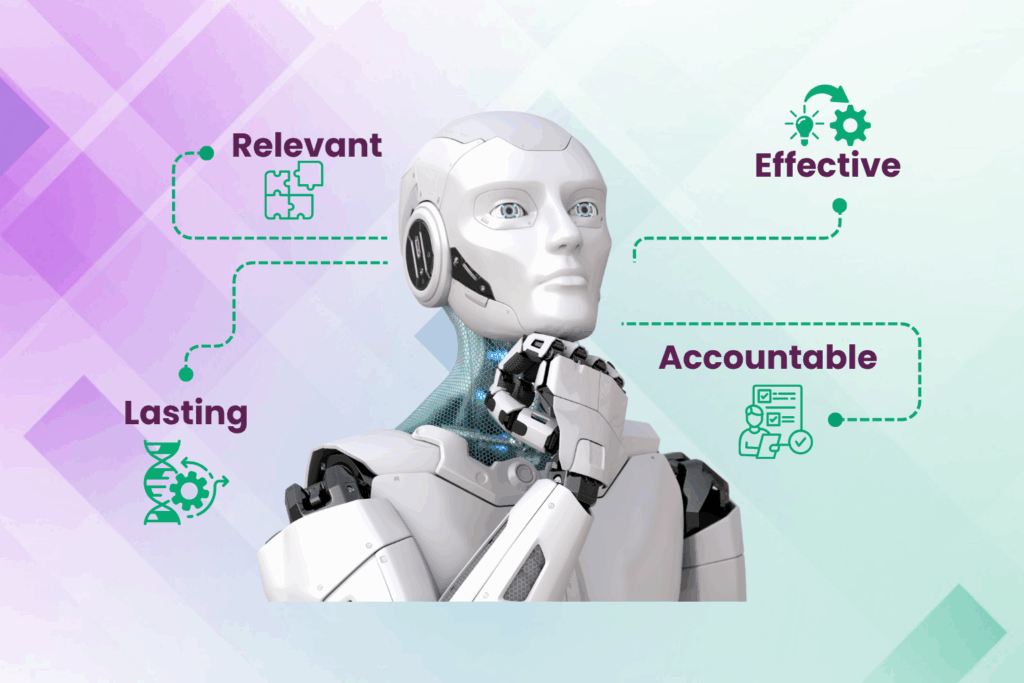

Beyond the Hype: Delivering R.E.A.L. AI Impact with Ignitho’s Foundational Framework

In today’s fast-paced market, many companies appear to be diving into AI out of FOMO (fear of missing out), without a clear understanding of the tangible outcomes it can deliver. While it may be tempting to jump on the trend to keep up with the market, Ignitho’s experience with customers has shown that true success lies not in blindly following, but in staying ahead with intention. Everything begins with mastering the fundamentals: clearly understanding what is needed, and focusing on solutions that provide real, measurable value for business growth.

At Ignitho, we therefore believe that real AI impact comes from strategically applying AI technologies to solve urgent business problems and deliver measurable outcomes. To cut through the buzz and ensure AI investments actually work, we rely on a simple yet powerful lens: the R.E.A.L. AI Checklist.

The R.E.A.L. AI Checklist: Ignitho’s Framework for Real Impact

Ignitho uses this practical framework to help our customers assess whether an AI implementation is truly Relevant, Effective, Accountable, and Lasting.

R – Relevant: Is the AI solving a real, urgent problem for the business?

Real AI starts with real needs. If you can’t clearly articulate the problem the AI is addressing, whether it’s reducing churn, predicting demand, or personalizing offers, then you’re investing in fluff. AI that isn’t tied to outcomes is bound to fail. At Ignitho, we focus on solving business-critical challenges, applying AI where it truly matters to drive measurable outcomes.

E – Effective: Is the AI being implemented with the right data, people, and processes?

AI is only as good as its execution. Many initiatives stall not because the technology is weak, but because teams lack clean data, skilled staff, or governance. Effective execution also means continuous enhancement, testing, and iteration, not a one-time deployment. Ignitho’s Frugal Innovation methodology ensures we deliver AI solutions that are practical, cost-effective, and designed to integrate seamlessly with existing systems, avoiding the common pitfalls of poor execution.

A – Accountable: Who owns the outcome, and is it measurable?

If no one is accountable for results, AI becomes an ornamental feature. Define KPIs. Assign owners. Track results. AI must be treated like any other business investment, with clear expectations, oversight, and ROI. At Ignitho, we align AI initiatives closely with business goals and ensure accountability at every stage to deliver tangible business value.

L – Lasting: Can the AI evolve with the business, data, and user behaviour?

The best AI learns and adapts. Static models become outdated fast. If your AI isn’t improving through feedback loops or retraining, it will eventually become irrelevant. Longevity is what separates short-term hype from long-term impact. Ignitho’s approach emphasizes building AI solutions that scale and evolve, so they stay aligned with dynamic business needs and continue to drive value over time.

Ignitho’s Real-World AI Impact

Our commitment to the R.E.A.L. AI Checklist is demonstrated by the concrete results we’ve achieved for our clients:

Data Accelerators for Magic ETL: Ignitho has enabled multiple enterprises to dramatically reduce the time spent on data quality engineering, from weeks to minutes, through automation and AI-driven validation. Ignitho’s accelerators use LLMs, retrieval-augmented generation (RAG), and AI agents to minimize pipeline rebuilding, saving data engineers significant time on ETL processes. This directly addresses the high costs and manual effort of maintaining traditional ETL pipelines when data sources change frequently. Our solutions also conform to stringent infrastructure compliance standards, maintaining security and reliability across data operations.

AI Agents for Quality Acceleration: For various enterprises, Ignitho has deployed agents that use advanced AI to generate test cases automatically from user stories. The agents also mine existing test case repositories for functional and technical knowledge, empowering Quality Engineers to build robust, error-free test automation quickly. Because the agents can also generate QE automation code, they automate much of the quality engineering automation work itself. This innovation addresses the critical need for automatic test case generation and automation, given the absence of a holistic solution in the market, and has led to 70% automation of test case creation.

Advanced AI Agents for Streamlined Insurance Underwriting: For a multi-billion-dollar business insurance enterprise, Ignitho built a solution that used LLMs, RAG, and Generative AI to support underwriters. Scores of pages were processed automatically and presented for decision-making, reducing decision time from 8 hours to just 15 minutes. Explainable AI principles supported the underwriters and let them ask more detailed questions before arriving at decisions. This directly solved the problem of underwriters spending multiple hours on evaluation and review, which had led to quality issues and revenue leakage.

Why the R.E.A.L. AI Checklist Matters More Than Ever

The cost of chasing AI hype without results is steep: lost time, wasted budget, and damaged trust. Boards are asking tougher questions. Regulators are watching. Employees are skeptical. And AI users? They just want things to work better.

R.E.A.L. AI should:

- Improve decisions or efficiency
- Enhance customer or employee experiences
- Generate measurable business outcomes
- Reduce cost or risk

If it’s not doing one of these, it’s not worth doing.

What You Should Do Now

Whether you’re just starting with AI or scaling enterprise-wide, ask these questions:

- What real-world problem is this AI solving?
- How will we measure success?
- Who is responsible for tracking and improving it?
- Will this AI continue to be relevant a year from now?

In a world where every tool calls itself AI, the winners will be those who use it intentionally. AI should not be a shiny badge on your tech stack; it should be a business accelerator. When done right, it’s not just artificial intelligence, it’s actual impact. At Ignitho, we help organizations move beyond the buzzwords by building solutions that solve real problems and create real value. Let’s stop chasing hype and start delivering outcomes that matter.

Exploring NoSQL: To Mongo(DB) or Not?

While building enterprise systems, choosing between SQL and NoSQL databases is a pivotal decision for architects and product owners. It affects the overall application architecture and data flow, and it also shapes how we conceptually view and process the various entities in our business processes. Today, we’ll delve into MongoDB, a prominent NoSQL database, and discuss what it is and when it can be a good choice for your data storage needs.

What is MongoDB?

At its core, MongoDB represents a shift away from conventional relational databases. Unlike SQL databases, which rely on structured tables and predefined schemas, MongoDB is a document-oriented database. Instead of writing SQL to access data, you use MongoDB’s own query language, which is one reason it falls under the NoSQL umbrella.

In MongoDB, data is stored as BSON (Binary JSON) documents. This gives you a lot of flexibility in how you represent data, because documents in the same collection can have different structures. The flexibility is particularly useful for data whose shape varies, such as semi-structured data.

Consider a simple example of employee records. In a traditional SQL database, you would define a fixed schema with predefined columns for employee name, ID, department, and so on. Changing this structure is not trivial, especially with high data volume, heavy traffic, and many indexes. In MongoDB, however, each employee record can be a separate document that contains only the attributes relevant to that employee. This dynamic schema lets you adapt to changing data requirements without extensive schema migrations.

How is Data Stored?

MongoDB’s storage model is built on BSON documents made up of field-value pairs. This design simplifies data retrieval, because each piece of information is accessible through a named field.
Let’s take the example of an employee record stored as a BSON document, shown here in MongoDB shell syntax:

```javascript
{
  "_id": ObjectId("64f1a2b3c4d5e6f7a8b9c0d1"),
  "firstName": "John",
  "lastName": "Doe",
  "department": "HR",
  "salary": 75000,
  "address": {
    "street": "123 Liberty Street",
    "city": "Freedom Town",
    "state": "TX",
    "zipCode": "12345"
  }
}
```

The ObjectId value is illustrative. Real ObjectIds are 12-byte values written as 24 hexadecimal characters, and they are normally generated automatically.

In this example, "_id" is the document’s unique identifier. MongoDB automatically creates a unique index on _id, so looking up a document by its _id is fast. Accessing any attribute is also straightforward. For instance, to retrieve the employee’s last name, you use the field "lastName". Fields inside embedded documents, such as the address in our example, are reached with dot notation (for example, "address.city"). This ability to store complex structures such as embedded documents adds to MongoDB’s flexibility.

MongoDB also lets you group documents into collections. Collections serve as containers for related documents, even when those documents have different structures. For example, you can have collections for employees, departments, and projects, each containing documents with attributes specific to that domain.

Query Language

In any database, querying data efficiently is essential for maintaining performance, especially as data volume grows. MongoDB provides a powerful query language that lets developers search and retrieve data with precision. Queries are built from operators such as $gt (greater than), which make it easy to filter and manipulate data. Here’s a simple example that finds all employees in the HR department earning more than $60,000:

```javascript
db.employees.aggregate([
  { $match: { department: "HR", salary: { $gt: 60000 } } }
])
```

The $match stage keeps only the employees in the HR department with a salary greater than $60,000. MongoDB’s query language also supports more sophisticated queries. One way to build them is with aggregation pipelines, which let you perform complex data transformations and analysis within the database itself.
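To make the $match semantics concrete, here is a minimal plain-JavaScript sketch. It is not MongoDB code and not how MongoDB evaluates queries internally. It implements a matcher for the small subset of the query language used above: exact equality, the $gt operator, and dot notation for embedded fields. The helper names getPath and matches and the sample documents are hypothetical. The array deliberately mixes documents of different shapes, just as a collection can.

```javascript
// Hypothetical helper: resolve a dot-notation path such as "address.state".
// Simplification: unlike MongoDB, this does not traverse arrays.
function getPath(doc, path) {
  return path.split(".").reduce((value, key) => (value == null ? undefined : value[key]), doc);
}

// Minimal sketch of a MongoDB-style filter: every field condition must hold
// (an implicit AND). Only exact equality and { $gt: n } are supported.
function matches(doc, filter) {
  return Object.entries(filter).every(([path, condition]) => {
    const value = getPath(doc, path);
    if (condition !== null && typeof condition === "object" && "$gt" in condition) {
      return value !== undefined && value > condition.$gt;
    }
    return value === condition;
  });
}

// Illustrative documents. The third lacks "address" and has an extra field.
const employees = [
  { _id: 1, lastName: "Doe", department: "HR", salary: 75000, address: { state: "TX" } },
  { _id: 2, lastName: "Lee", department: "HR", salary: 58000, address: { state: "VA" } },
  { _id: 3, lastName: "Khan", department: "Finance", salary: 91000, certifications: ["CPA"] },
];

const hrAbove60k = employees.filter((e) => matches(e, { department: "HR", salary: { $gt: 60000 } }));
console.log(hrAbove60k.map((e) => e.lastName)); // [ 'Doe' ]

const inTexas = employees.filter((e) => matches(e, { "address.state": "TX" }));
console.log(inTexas.map((e) => e._id)); // [ 1 ]
```

A document that lacks the queried field simply fails the condition instead of raising an error. That mirrors how a flexible schema behaves in practice.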
A pipeline consists of a sequence of stages, each of which processes and transforms the documents as they pass through. We saw the $match stage in the example above. Other stages, such as $group, aggregate the results as needed. For example, to compute the average salary per department for employees earning more than $60,000, we can use a pipeline like this:

```javascript
db.employees.aggregate([
  {
    $match: {
      salary: { $gt: 60000 } // Keep employees with a salary greater than $60,000
    }
  },
  {
    $group: {
      _id: "$department",            // Group by the "department" field
      avgSalary: { $avg: "$salary" } // Average salary within each group
    }
  }
])
```

Finally, efficient retrieval at scale depends on indexes. Apart from the _id field, which MongoDB indexes automatically, a collection has no indexes until you define them. MongoDB lets you create indexes on specific fields within a collection to improve query performance. These indexes act as guides that help MongoDB quickly locate documents matching the query criteria. In our example, you can optimize the query for HR employees by creating a compound index on the "department" and "salary" fields (using createIndex). Such an index can significantly speed up queries that filter by department and salary. Without a suitable index, MongoDB must perform a full collection scan, which can be slow and resource-intensive for large datasets.

It’s important to note that indexes have trade-offs. They improve query performance, but they also consume storage space. They can also slow down write operations (inserts, updates, deletes), because MongoDB must maintain every index when data changes. During database design, it is therefore important to look at the application’s needs and strike a balance between query performance and index maintenance.
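As a rough mental model of what the $match plus $group pipeline computes, here is a sketch that reproduces the same result over a plain JavaScript array. It does not show how MongoDB executes the pipeline. The function name avgSalaryByDepartment and the sample data are hypothetical. One caveat carries over to real MongoDB: $group does not guarantee the order of its output documents, so code should not depend on that order.

```javascript
// Plain-JavaScript sketch of:
//   [{ $match: { salary: { $gt: minSalary } } },
//    { $group: { _id: "$department", avgSalary: { $avg: "$salary" } } }]
function avgSalaryByDepartment(employees, minSalary) {
  // Stage 1 ($match): keep only employees above the salary threshold.
  const matched = employees.filter((e) => e.salary > minSalary);

  // Stage 2 ($group): accumulate a running sum and count per department.
  const groups = new Map();
  for (const e of matched) {
    const acc = groups.get(e.department) ?? { sum: 0, count: 0 };
    acc.sum += e.salary;
    acc.count += 1;
    groups.set(e.department, acc);
  }

  // Emit documents shaped like the pipeline output: { _id, avgSalary }.
  return Array.from(groups, ([department, { sum, count }]) => ({
    _id: department,
    avgSalary: sum / count,
  }));
}

// Illustrative data only.
const staff = [
  { department: "HR", salary: 75000 },
  { department: "HR", salary: 65000 },
  { department: "HR", salary: 58000 }, // removed by the $match stage
  { department: "Finance", salary: 91000 },
];

for (const { _id, avgSalary } of avgSalaryByDepartment(staff, 60000)) {
  console.log(`${_id}: ${avgSalary}`);
}
// HR: 70000
// Finance: 91000
```

Filtering before grouping matters here. It keeps the $58,000 salary out of the HR average, just as placing $match first does in the real pipeline.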
Performance and Scalability

MongoDB’s approach to scalability also sets it apart from traditional SQL databases. Because each document is self-contained, MongoDB offers both vertical and horizontal scalability, so you can adapt to changing workloads and data volumes. Vertical scaling involves adding more resources (CPU, RAM, storage) to a single server, effectively increasing its capacity. This approach suits scenarios where performance can be improved by upgrading hardware, and it is the typical way traditional RDBMS systems are scaled. In contrast, horizontal scaling involves distributing data across multiple servers or nodes,

Improving React Performance: Best Practices for Optimization

MERN, the magical acronym that encapsulates the power of MongoDB, Express.js, React, and Node.js, beckons full-stack developers into its realm. Our focus for this blog is React, the JavaScript library that revolutionized user interfaces on the web.

What is React?

React is a JavaScript library for creating dynamic and interactive user interfaces. Since its inception, React has gained immense popularity. Thanks to its simplicity and flexibility, it has become a go-to choice among developers for building user interfaces. However, as applications grow in complexity, React’s performance can become a concern. Slow-loading pages and unresponsive interfaces lead to a poor user experience. Fortunately, several best practices and optimization techniques can help you improve React performance.

Ignitho has been developing enterprise full-stack apps with React for many years, and we recently held a tech talk titled “Introduction to React”. In this blog post, the first part of our MERN series, we discuss React and explore some strategies for keeping your React applications running smoothly.

Use React’s Built-in Performance Tools

React provides tools that help you identify and resolve performance bottlenecks in your application. The React Developer Tools browser extension, for instance, lets you inspect the component hierarchy, track component updates, and analyze render times in its Profiler tab. These insights into your application’s performance let you make targeted optimizations.

Functional Components & Component Interaction

A simple way to keep React applications performant is to build them from small functional components. Though it sounds cliché, it is a straightforward and proven tactic for building efficient React applications quickly.
Experienced React developers suggest keeping your components small, because smaller components are easier to read, test, maintain, and reuse. Some advantages of small, focused components:

- Make code more readable
- Are easy to test
- Can yield better performance, because state changes can be confined to smaller parts of the tree
- Make debugging a piece of cake
- Reduce coupling

Optimize Rendering with PureComponent and React.memo

React offers two ways to skip unnecessary renders: PureComponent and React.memo.

PureComponent: A base class for class components that implements shouldComponentUpdate with a shallow comparison of props and state. Use it when you want to prevent unnecessary renders in class components.

```jsx
class MyComponent extends React.PureComponent {
  // …
}
```

React.memo: A higher-order component for function components that skips re-rendering when the new props are shallowly equal to the previous ones. It can significantly reduce re-renders when used correctly.

```jsx
const MyComponent = React.memo(function MyComponent(props) {
  // …
});
```

Used this way, these optimizations prevent unnecessary renders and improve your application’s performance.

Memoize Expensive Computations

Avoid recalculating values or performing expensive computations on every render. Instead, memoize these values with useMemo. In Redux applications, you can also use memoized selectors (for example, those created with Reselect’s createSelector) passed to useSelector. Either way, the work is skipped when the inputs haven’t changed.

```jsx
const memoizedValue = useMemo(() => computeExpensiveValue(dep1, dep2), [dep1, dep2]);
```

Avoid Reconciliation Pitfalls

React’s reconciliation algorithm is efficient, but it can still cause performance issues if not used wisely. Avoid using array indices as keys for list items. When items are added, removed, or reordered, index-based keys can cause unnecessary re-renders and even mismatched component state. Instead, use stable unique identifiers as keys.

```jsx
{items.map((item) => (
  <MyComponent key={item.id} item={item} />
))}
```

Additionally, be careful when calling setState repeatedly in a loop. Depending on the React version and context, the calls either trigger multiple renders or are batched into one. When they are batched, each call that reads this.state sees the same stale value, so earlier increments are lost. Use the functional form of setState so that each update builds on the previous state:
```jsx
this.setState((prevState) => ({
  count: prevState.count + 1,
}));
```

Lazy Load Components

If your application contains large components that are not immediately visible to the user, consider lazy loading them. React.lazy() and Suspense let you load components asynchronously when they are needed, which can significantly improve your application’s initial load time.

```jsx
import React, { Suspense } from "react";

// React.lazy expects the imported module to have a default export.
const LazyComponent = React.lazy(() => import("./LazyComponent"));

function MyComponent() {
  return (
    <div>
      {/* LoadingSpinner stands for any fallback component you define. */}
      <Suspense fallback={<LoadingSpinner />}>
        <LazyComponent />
      </Suspense>
    </div>
  );
}
```

Profile and Optimize Components

React provides a built-in Profiler component that measures how often part of the tree renders and how long rendering takes. With it, you can identify the components causing performance bottlenecks and optimize them. Profiling adds some overhead and is disabled in production builds by default. The experimental unstable_trace API from scheduler/tracing, sometimes suggested for this purpose, was removed in React 18.

```jsx
import { Profiler } from "react";

// React calls onRender after each commit of the profiled subtree.
const onRender = (id, phase, actualDuration) =>
  console.log(`${id} ${phase}: ${actualDuration.toFixed(1)} ms`);

const App = () => (
  <Profiler id="MyComponent" onRender={onRender}><MyComponent /></Profiler>
);
```

Bundle Splitting

If your React application is large, consider code splitting to break it into smaller, more manageable chunks. Bundlers such as Webpack can generate separate bundles for different parts of your application. Users then download only the code they need up front, which speeds up the initial load.

Use PureComponent for Lists

When rendering lists, wrap list items in React.memo (or use React.PureComponent for class components). Items whose props haven’t changed are then not re-rendered. This helps only if each unchanged item keeps the same object identity between renders.

```jsx
function MyList({ items }) {
  return (
    <ul>
      {items.map((item) => (
        <MyListItem key={item.id} item={item} />
      ))}
    </ul>
  );
}

const MyListItem = React.memo(function MyListItem({ item }) {
  // …
});
```

Optimize Network Requests

Handling network requests efficiently can significantly affect your application’s performance. Use techniques like caching, request deduplication, and lazy loading of data to minimize network overhead.
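At its simplest, request deduplication means sharing one in-flight promise per key. The sketch below is framework-agnostic plain JavaScript. createDedupedFetcher and the fetcher argument are hypothetical names; in a real app, the fetcher would typically wrap fetch. Many data-fetching libraries offer deduplication and caching out of the box. This example only illustrates the idea and is not their implementation.

```javascript
// Minimal sketch: concurrent calls with the same key share one in-flight request.
// Once the request settles, the entry is removed, so this deduplicates but does not cache.
function createDedupedFetcher(fetcher) {
  const inFlight = new Map(); // key -> pending promise

  return function get(key) {
    const pending = inFlight.get(key);
    if (pending) return pending;

    // The executor runs synchronously, so fetcher is called right away;
    // a synchronous throw becomes a rejection instead of escaping.
    const promise = new Promise((resolve) => resolve(fetcher(key)))
      .finally(() => inFlight.delete(key));
    inFlight.set(key, promise);
    return promise;
  };
}

// Usage with a hypothetical fake fetcher that counts how often it is called.
let calls = 0;
const fakeFetch = (url) => {
  calls += 1;
  return new Promise((resolve) => setTimeout(() => resolve(`data for ${url}`), 10));
};

const getUser = createDedupedFetcher(fakeFetch);
const first = getUser("/api/users/1");
const second = getUser("/api/users/1"); // reuses the pending request
console.log(first === second, calls); // true 1
```

Removing the entry when the request settles keeps data fresh: the next call after a response triggers a new request. Adding a time-limited cache on top is a separate design decision.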
Regularly Update Dependencies

Keep React and related libraries up to date. New releases often include performance improvements and bug fixes that can benefit your application.

Trim JavaScript Bundles

To eliminate redundant code, trim your JavaScript bundles. Removing duplicates and unused code reduces how much JavaScript the browser must download, parse, and execute. That can noticeably improve your React app’s performance. Start by analyzing what actually ends up in your bundles.

Server-Side Rendering (SSR)

Rendering pages on the server sends ready-to-display HTML to the browser, which can improve perceived load time. Next.js is one of the most popular React frameworks for server-side rendering, and its use is growing among developers, including for Next.js-based React admin dashboards. Next.js-integrated React admin templates can help speed up development.

In conclusion, improving React performance is essential for delivering a smooth user experience. By following these best practices and optimization techniques, you can keep your React applications fast and responsive, even as they grow in complexity. Remember

The Changing Global Delivery Model for AI Led Digital Engineering

As the technological and cultural landscape undergoes tectonic shifts with the advent of AI in a post-pandemic world, businesses are striving to stay ahead of the curve. At Ignitho, we are doing the same. We aim not just to keep pace, but to shape the future through our global delivery model, augmented by our AI Center of Excellence (CoE) in Richmond, VA. This CoE model, firmly anchored in the “jobs to be done” concept, reflects Ignitho’s commitment to creating value, fostering innovation, and staying ahead of emerging trends. Let’s examine three key reasons why Ignitho’s upgraded global delivery model is a game-changer.

Embracing the AI Revolution

The rise of artificial intelligence (AI) is redefining industries across the board, and Ignitho recognizes the pivotal role that data strategy plays in this transformation. Rather than simply creating conventional offices staffed with personnel, Ignitho’s global delivery model focuses on establishing centers of excellence dedicated to specific functions. These might be data analytics, AI development, or other specialized domains. Our AI Center of Excellence in Richmond, VA is designed to become that source of specialized, applied expertise.

By actively engaging with our clients, we have gained invaluable insights into what they truly require in this ever-changing technological landscape. Our global delivery approach goes beyond simply executing predefined roadmaps. Instead, it involves close collaboration to navigate the intricate web of shifting data strategies and AI adoption. In a world where data is the new currency and AI is transforming industries, crafting effective solutions is no longer a linear journey.

Ignitho’s Centers of Excellence in the US are intended to serve as collaborative hubs. There, our experts work closely with clients to make sense of the dynamic data landscape and shape AI adoption strategies. The traditional global delivery model then takes over to do what it does best. This approach to AI is not just about creating models, churning out reports, or ingesting data into various databases. It is about crafting and delivering a roadmap that aligns seamlessly with clients’ evolving needs.

Reshaping Digital Application Portfolios

The second pillar of Ignitho’s global delivery model is reshaping digital application portfolios. Traditional software development approaches are undergoing a significant shift. The drivers are low-code platforms, the need for closed-loop AI models, and the need to act on insights in real time. As with AI programs, Ignitho’s model allows us to engage effectively on top-down architecture definition in close collaboration with clients. The resulting roadmap then feeds into the conventional delivery model of building and deploying the software as needed. Our global teams share the same fundamentals and training in low-code and AI-led digital engineering, and the wider global team is equipped to rapidly develop and deploy applications in a distributed Agile model as needed. With this approach, Ignitho ensures that the right solutions are developed and that clients’ goals are met more effectively.

Shifting Cultural Paradigms

As the global workforce evolves, cultural patterns are shifting notably. People increasingly value outcomes and results over sheer effort expended. Networking and collaboration are also no longer limited by physical boundaries. Ignitho’s global delivery model aligns with this cultural transformation by focusing on value-driven centers of excellence. By delivering tangible and distinct value at each center, Ignitho embodies the shift from measuring productivity by hours worked to gauging success by the impact created.

What’s Next?

Ignitho’s upgraded, Center of Excellence-based global delivery model is better suited to tackle the challenges and opportunities posed by AI, new ways of digital engineering, and evolving cultural norms in which success is taking on new meanings. As the digital landscape continues to evolve, businesses that embrace Ignitho’s approach stand to gain a competitive edge. The synergy between specialized centers, data-driven strategies, and outcome-oriented cultures will enable us to provide solutions that resonate with the evolving needs of clients across industries. We are not just adapting to change; we are driving it.

C-Suite Analytics in Healthcare: Embracing AI

C-suite healthcare analytics has become more crucial than ever in today’s rapidly evolving healthcare landscape characterized by mergers, private equity investments, and dynamic regulatory and technological changes. To create real-time, actionable reports that are infused with the right AI insights, we must harness and analyze data from various sources, including finance, marketing, procurement, inventory, patient experience, and contact centers. However, this process often consumes significant time and effort. In addition, maintaining a robust AI strategy in such a dynamic landscape is no easy task. In order to overcome these challenges, healthcare leaders must embrace comprehensive business intelligence and AI-powered solutions that provide meaningful dashboards for different stakeholders, streamline data integration, and enable AI driven predictive analytics. In this blog we will: Highlight key challenges Present a solution framework to addresses these critical issues Key Industry Challenges Not only do healthcare organizations face internal challenges such as harnessing data from various sources but they also encounter industry dynamics of M&A. To create actionable reports when needed for board level reporting and operational control, the various sources of data that must be integrated in such as environment is daunting – finance, marketing, procurement, inventory, patient experience, and contact centers, and so on. Dynamic M&A Landscape The healthcare industry is experiencing a constant wave of mergers and acquisitions, leading to an increasingly complex data and technology landscape. When organizations merge or acquire new entities, they inherit disparate data systems, processes, and technologies. Integrating these diverse data sources becomes a significant challenge, impeding timely and accurate reporting. Consider a scenario where a healthcare provider acquires multiple clinics of various sizes. 
Each entity may have its own electronic health record (EHR) system, financial software, and operational processes. Consolidating data from these disparate systems into a unified view is complex, and extracting meaningful insights from the combined data requires specialized integration efforts.

Data Fragmentation and Manual Effort

Healthcare organizations operate in a complex ecosystem, which results in data fragmentation across departments and systems. Extracting, aggregating, and harmonizing data from diverse sources can be laborious and time-consuming. As a result, generating up-to-date reports that provide valuable insights becomes challenging.

Example: Pulling data from the finance, marketing, and patient experience departments may involve exporting data from multiple software systems, consolidating spreadsheets, and manually integrating the information. This manual effort can take days or even weeks, delaying actionable insights.

Need for Predictive Analytics

To navigate the changing healthcare landscape effectively, organizations need to make informed decisions based on accurate predictions and what-if analysis. Traditional reporting methods fall short in providing proactive insights for strategic decision-making.

Example: Predicting future patient demand, identifying supply chain bottlenecks, or optimizing resource allocation requires advanced analytics capabilities that go beyond historical data analysis. By leveraging AI, healthcare leaders can gain foresight into trends, mitigate risks, and drive proactive decision-making.

How to Address These Challenges?

To address these challenges, we need a top-down solution that has been strategically designed for them, such as an AI-driven CDP accelerator for healthcare. Tackling the required integrations, reports, and insights on a bespoke basis every time a new need arises is not a scalable approach.
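Whatever platform is chosen, the core of the consolidation work described above is mapping each acquired entity’s data onto a shared schema. The following Python sketch illustrates that pattern; it is not a description of any specific CDP accelerator. The clinic extracts, field names, and code mappings are all hypothetical, and a production pipeline would read from the real source systems and map to standard terminologies. The sketch renames fields per source, normalizes codes and date formats, and prefixes IDs so records from different entities cannot collide. It then aggregates the unified records.

```python
from collections import Counter

# Hypothetical extracts from two acquired clinics: same facts, different field names and codes.
CLINIC_A = [
    {"patient_id": "A-001", "sex": "M", "visit_date": "2023-05-01"},
    {"patient_id": "A-002", "sex": "F", "visit_date": "2023-05-03"},
]
CLINIC_B = [
    {"mrn": "7781", "gender": "male", "date_of_visit": "05/02/2023"},
    {"mrn": "7782", "gender": "unknown", "date_of_visit": "05/04/2023"},
]

# Assumed per-source mapping from local field names to a shared schema.
FIELD_MAPS = {
    "clinic_a": {"patient_id": "patient_id", "sex": "sex", "visit_date": "visit_date"},
    "clinic_b": {"mrn": "patient_id", "gender": "sex", "date_of_visit": "visit_date"},
}

# Assumed code mapping: every known local sex/gender value becomes one shared code.
SEX_CODES = {"m": "male", "male": "male", "f": "female", "female": "female"}


def normalize_date(value: str) -> str:
    """Convert US-style MM/DD/YYYY to ISO YYYY-MM-DD; leave ISO dates unchanged."""
    if "/" in value:
        month, day, year = value.split("/")
        return f"{year}-{month}-{day}"
    return value


def harmonize(source: str, rows: list[dict]) -> list[dict]:
    """Rename fields, normalize codes and dates, and tag each record with its source."""
    mapping = FIELD_MAPS[source]
    unified = []
    for row in rows:
        record = {mapping[key]: value for key, value in row.items() if key in mapping}
        # Prefix IDs so local identifiers cannot collide across merged entities.
        record["patient_id"] = f"{source}:{record['patient_id']}"
        # Unmapped values fall back to "unknown" rather than leaking local codes.
        record["sex"] = SEX_CODES.get(record["sex"].lower(), "unknown")
        record["visit_date"] = normalize_date(record["visit_date"])
        record["source"] = source
        unified.append(record)
    return unified


unified = harmonize("clinic_a", CLINIC_A) + harmonize("clinic_b", CLINIC_B)
visits_by_sex = Counter(record["sex"] for record in unified)
print(visits_by_sex)
```

Because the mappings are kept as data rather than code, onboarding another acquired entity is largely a matter of adding one more entry to the hypothetical `FIELD_MAPS` table.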
An integrated C-suite analytics solution combines data from multiple sources and leverages AI capabilities. Its key features are described below.

Meaningful Predefined Dashboards

The analytics platform should provide intuitive and customizable dashboards that present relevant insights in a visually appealing manner. This empowers C-suite executives to quickly grasp the key performance indicators (KPIs) that drive their decision-making. These dashboards should address the relevant KPIs for various audiences, such as the board, the C-suite, operations, and providers.

Example: A consolidated dashboard could showcase critical metrics such as financial performance, patient satisfaction scores, inventory levels, and marketing campaign effectiveness. Executives gain a comprehensive overview of the organization’s performance and can identify areas requiring attention or improvement.

AI-Powered Consumption of Insights

As recent developments have shown, AI technologies can play a vital role in managing the complexity of data analysis. The analytics solution should incorporate AI-driven capabilities, such as natural language processing and machine learning, to automate insight consumption, anomaly detection, and trend tracking.

Example: With a simple AI-based chatbot, the analytics platform can reduce costs by automating report generation. It can also help users identify outliers and trends. In addition, it can provide insights into data lineage, allowing organizations to trace the origin and transformation of data across merged entities.

Seamless Data Integration

The analytics solution should offer seamless integration with various systems, eliminating the need for extensive manual effort. It should connect to finance, marketing, procurement, inventory, patient experience, contact center, and other relevant platforms, ensuring real-time data availability.
Example: By integrating with existing systems, the analytics platform can automatically pull data from different departments, eliminating manual data extraction and aggregation. This keeps reports current and accurate, allowing executives to make data-driven decisions promptly.

AI-Driven Predictive Analytics

Using AI algorithms, the analytics solution should enable predictive analytics, allowing healthcare leaders to identify trends, perform what-if analysis, and make informed strategic choices.

Example: By analyzing historical data and incorporating external factors, such as demographic changes or shifts in healthcare policies, the AI-powered platform can forecast patient demand, predict inventory requirements, and simulate various scenarios for optimal decision-making.

Provide a Path for Data Harmonization and Standardization

Integrating different data systems is only part of the challenge: organizations must also harmonize and standardize data across merged entities. Varying data formats, coding conventions, and terminology can hinder accurate analysis and reporting.

Example: When two providers merge, inconsistencies can arise from several sources:
- differences in how patient demographics are recorded;
- different coding practices for diagnoses and procedures;
- variations in medical terminology.

Harmonizing these diverse datasets requires significant effort, including data cleansing, mapping, and standardization.

Next steps

In an era of rapid change and increasing complexity,

Transformative Role of AI in Custom Software Development

Welcome to the world of AI in custom software development. In this blog post, we will look at the impact of AI on custom software development in the enterprise. Artificial intelligence promises to revolutionize how we create applications and the larger business technology ecosystems around them. AI brings the benefits of automated code generation and improved code quality. Even so, human expertise still has a critical place in defining the application structure and the overall enterprise tech architecture.

Streamlining the Development Workflow

First, let’s explore how AI can enhance the development process. This will create significant savings in mundane software development tasks and empower developers to be more productive.

In almost every application development scenario, developers spend a significant amount of time writing repetitive code that performs similar tasks. We often call this boilerplate code. It covers tasks such as authentication, data validation, input sanitization, or generating code for common functionality such as calling APIs. These tasks, although necessary, can be time-consuming and monotonous, preventing developers from dedicating their efforts to more critical aspects of the development process.

Even today, accelerators such as the Intelligent Quality Accelerator (IQA) and the Intelligent Data Accelerator (IDA), as well as various shortcuts, can generate much of this code automatically. However, AI-driven tools and frameworks can take this much further. AI makes code generation context-aware: tools no longer produce generic code that developers must then customize. This gives developers a significant productivity boost.

For example, let’s consider a developer who needs to implement a form validation feature in their application.
Traditionally, they would have to write multiple lines of code to validate each input field, check data types, and ensure data integrity. With AI-powered code generation, developers can specify their requirements, and the AI tool can generate the necessary code snippets tailored to their specific needs. This automation not only saves time and effort but also minimizes the chance of introducing errors.

By leveraging AI in this way, developers can streamline their workflow, increase efficiency, and devote more time to higher-level design and problem-solving. Instead of being bogged down by repetitive coding tasks, they can focus on crafting innovative solutions, creating seamless user experiences, and tackling complex challenges.

The Importance of Human Expertise

While AI excels at code generation, the structure of an application goes beyond lines of code. Human expertise plays a key role in defining the overall structure and ensuring that it aligns with the intended functionality, architecture, and user experience.

Consider a scenario where an organization wants to develop an application that processes customer returns. The application needs modules for managing customer information, tracking interactions, looking up merchandise and vendor-specific rules, and generating reports. AI can help generate the code for these individual modules based on predefined patterns and best practices. However, human experts hold the domain knowledge and understanding of the business requirements. They determine how these modules should be structured and how they should interact to deliver the desired functionality seamlessly.

Software architects or senior developers collaborate with stakeholders to analyze the business processes and define the architectural blueprint of the application.
They consider factors such as scalability, performance, security, and integration with existing systems. By applying their expertise, they ensure that the application is robust, extensible, and aligned with the organization’s long-term objectives.

Human input also plays a critical role because a new software application usually has to be integrated into an existing tech ecosystem and aligned with the organization’s overall technology architecture. Consider another scenario in which an organization plans to build a new e-commerce platform. The enterprise tech architecture needs to account for the selection of the platform software, desired plugins, external database systems, deployment strategies, and security measures. AI can help implement detailed software functionality. Even so, human architects are still the ones with the expertise to evaluate and select the architecture that best fits the organization’s specific requirements and constraints.

Better Talent Management

With AI assisting in custom software development, the management of skills and talent within an enterprise can be significantly improved. As developers are relieved of mundane coding tasks, they can work at a higher level, better leveraging their expertise to drive innovation and solve complex problems.

Consider an enterprise team tasked with integrating a new e-commerce platform into an existing system. Traditionally, this would involve writing custom code to handle product listings, shopping cart functionality, payment processing, and order management. Developers would have to invest considerable time and effort in understanding the intricacies of the platform, learn its specific APIs, and implement much of the necessary functionality from scratch.
However, with the aid of AI in code generation, developers can automate a significant portion of this process. They can use AI-powered tools that provide pre-built code snippets tailored to the selected e-commerce platform, which lets them integrate the platform into the existing system much faster.

Thus, integrating AI into custom software development improves productivity and efficiency. It also eases the pressure of talent management and hiring within enterprises. As AI automates base-level coding tasks, the need for sheer numbers of developers diminishes. AI helps make skills more transferable across projects. It also reduces the need to hire large numbers of developers focused solely on low-level coding. With AI handling the foundational coding work, organizations can prioritize hiring developers with expertise in areas such as software architecture, system integration, data analysis, and user experience design.

Additionally, the adoption of AI-powered tools and frameworks enables developers to explore new technologies more easily. They can adapt their existing skill sets to different projects and platforms, reducing

Rethinking Software Application Architecture with AI: Unlocking New Possibilities – Part 2

Welcome back! In our previous blog post, “Rethinking Software Application Architecture with AI: Unlocking New Possibilities – Part 1”, we explored the transformative impact of AI on software application development. We discussed the need to rethink traditional architecture and design, focusing on leveraging AI-driven insights to improve user experience. If you missed it, be sure to read it for a comprehensive understanding of the foundations of AI-enabled software application development.

In this blog post, we continue our exploration with parts 3 and 4 of the series. To recap, the four parts are:

1. Harnessing the Power of AI Models
2. Transforming Data into Predictive Power
3. Creating a Feedback Loop
4. Evolution of Enterprise Architecture Guidelines and Governance

We will dive into the vital aspects of the feedback loop and data enrichment, as well as the evolution of enterprise architecture guidelines and governance practices. These elements play a crucial role in optimizing user experience, enhancing data-driven insights, and ensuring responsible AI practices. So, let’s continue our journey into the world of AI-enabled software application development!

Creating a Feedback Loop

In the age of AI, it’s essential to establish a feedback loop between the application and AI models. This feedback loop allows the application to continuously improve user experience and enrich the underlying data store on which the AI models operate.

One way to implement the feedback loop is by capturing user interactions and behavior within the application. This data can be fed back into the AI models to refine their understanding of user preferences, patterns, and needs. For instance, an AI-powered customer portal can learn from user interactions to provide more accurate and contextually relevant experiences over time. By continuously analyzing and incorporating user interactions, the application can adapt its behavior and offer an improved experience.
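As a rough illustration of this capture, update, adapt cycle, the Python sketch below uses a simple per-user frequency profile as a stand-in for a real AI model. The `PreferenceModel` class, the topic names, and the idea of ranking portal content by past engagement are all hypothetical. In practice, the captured events would be sent to a trained model through an API rather than counted in memory.

```python
from collections import Counter, defaultdict


class PreferenceModel:
    """Hypothetical stand-in for an AI model: a per-user count of interaction topics."""

    def __init__(self) -> None:
        self._profiles: dict[str, Counter] = defaultdict(Counter)

    def record(self, user_id: str, topic: str, weight: int = 1) -> None:
        # Capture step: each interaction the application logs updates the user's profile.
        self._profiles[user_id][topic] += weight

    def rank(self, user_id: str, candidates: list[str]) -> list[str]:
        # Adapt step: order candidate content by how often the user engaged with it.
        # sorted() is stable even with reverse=True, so ties keep their original order.
        profile = self._profiles.get(user_id, Counter())
        return sorted(candidates, key=lambda topic: profile[topic], reverse=True)


# Usage: interactions captured in the portal immediately change what is shown first.
model = PreferenceModel()
for topic in ["billing", "orders", "billing"]:
    model.record("u42", topic)

ranked = model.rank("u42", ["orders", "returns", "billing"])
print(ranked)
```

Each call to `record` updates the profile that the next call to `rank` reads, so the application’s behavior shifts as soon as new interactions are captured. In a real deployment, the anonymization and consent controls would sit in front of the capture step. The application would send only permitted, de-identified events to the model.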
In addition, we can enhance traditional data lakes by using the application data itself to enrich the underlying AI models. As users interact with the application, their data can be anonymized and aggregated to train and refine AI algorithms, which can improve experiences across the enterprise.

The feedback loop also enables the application to adapt to changing user needs and preferences. By monitoring user interactions and analyzing feedback, software developers can identify areas for improvement and prioritize feature enhancements. This iterative process keeps the application relevant and aligned with user expectations over time.

To support the feedback loop, it is crucial to establish robust data governance practices. This involves ensuring data privacy, security, and compliance with regulatory standards. Users must have control over their data and receive transparent information about how it is used to enhance the application’s AI capabilities. By building trust and maintaining ethical data practices, software applications can foster a positive user experience and encourage user engagement.

In the next section, we will explore why evolving enterprise architecture guidelines and governance is essential in the context of AI-enabled software application development.

Evolution of Enterprise Architecture Guidelines and Governance

AI-enabled software application development calls for rethinking enterprise architecture guidelines and governance practices. Traditional approaches may not fully cover the unique considerations and challenges posed by AI integration. To ensure successful implementation and maximize the benefits of AI, we must adapt architecture frameworks and governance processes accordingly. Here are a few ways to accomplish this:

Modular Design and Microservices Architecture: AI integration often requires specialized AI services or models.
To enable seamless integration and scalability, organizations should adopt an approach that makes AI insights available in real time to the applications that need them. This is also the concept behind Ignitho’s Customer Data Platform architecture.

Data Management and Infrastructure: AI relies heavily on data, so we must develop robust data management strategies to ensure data quality, security, and accessibility. This includes implementing data privacy and security measures and optimizing data infrastructure to handle the increased processing and storage demands of AI. Organizations should also consider integrating data lakes, data warehouses, or other data management solutions that facilitate AI model training and data analysis.

Ethical Considerations: With AI’s growing influence, ethical considerations become paramount. Organizations must establish guidelines on three fronts. First, AI algorithms must be fair, transparent, and accountable. Second, bias in data sources must be identified and mitigated. Third, user privacy and consent must be respected. These guidelines should be incorporated into governance processes to uphold responsible AI practices.

Performance and Scalability: AI integration can introduce new performance and scalability requirements. AI models often require significant computational resources and can place additional demands on the infrastructure. Scalability considerations should be built into the architecture design to accommodate potential growth in data volumes, user interactions, and AI workloads.

Continuous Monitoring and Iterative Improvement: AI-enabled applications require continuous monitoring and iterative improvement to remain effective and accurate. Governance processes should define monitoring mechanisms to track this. Feedback loops, as discussed earlier, play a crucial role in capturing user interactions and improving AI models.
Continuous improvement practices should be integrated into governance processes to drive ongoing optimization and refinement.

By evolving enterprise architecture guidelines and governance practices to embrace AI-enabled software application development, organizations can unlock the full potential of AI while mitigating risks and ensuring responsible use.

Conclusion: Unleashing the Potential of AI-Enabled Applications

AI-enabled software application development is a game-changer. By embracing AI, rethinking architecture, and evolving governance processes, organizations can unlock significant potential. These key takeaways shape the path to success (we covered 1 and 2 in Part 1 of this blog):

1. Embrace API-enabled applications to access AI models and insights.
2. Harness AI-driven insights to provide predictive capabilities and empower users.
3. Establish a feedback loop to continuously improve user experience and enrich data stores.
4. Adapt architecture and governance to support modular design, robust data management, ethics, and scalability.

AI-enabled software applications present immense possibilities. Organizations can create intelligent, user-centric applications that drive informed decision-making and operational efficiency. Contact us if you want to know more about our product engineering services.

Rethinking Software Application Architecture with AI: Unlocking New Possibilities – Part 1

In today’s rapidly evolving technological landscape, artificial intelligence (AI) is reshaping the way we develop and design software applications. Traditional approaches to software architecture and design are no longer sufficient to meet the growing demands of users and businesses. To harness the true potential of AI, we need to reimagine the very foundations of software application development. That is exactly what our AI-led digital engineering approach does for software application development and engineering.

In this blog post, we will explore how AI-enabled software application development opens up new horizons and calls for a fresh perspective on architecture and design. We will delve into key considerations and highlight the transformative power of incorporating AI into software applications.

Note: In this blog we are not talking about using AI to develop applications. That will be the topic of a separate blog post.

This blog has four parts:

1. Harnessing the Power of AI Models
2. Transforming Data into Predictive Power
3. Creating a Feedback Loop
4. Evolution of Enterprise Architecture Guidelines and Governance

We’ll cover parts 1 and 2 in this blog. Parts 3 and 4 will be covered next week.

1. Harnessing the Power of AI Models with APIs

In the era of AI, software applications can tap into a vast array of pre-existing AI models to retrieve valuable insights and provide enhanced user experiences. This is made possible through APIs that allow seamless communication with AI models. Thus, a key tenet of software engineering going forward is to leverage AI in this way to enhance user experience. By embracing this, we can revolutionize how our software interacts with users and leverages AI capabilities. Whether it’s natural language processing, computer vision, recommendation systems, or predictive analytics, APIs provide a gateway to a multitude of AI capabilities.
This integration allows applications to tap into the collective intelligence amassed by AI models, enhancing their ability to understand and engage with users.

The benefits of API-enabled applications that can leverage AI are manifold. By integrating AI capabilities, applications can personalize user experiences, delivering tailored insights and recommendations. Consider an e-commerce application that uses AI to understand customer preferences. By calling an API that analyzes historical data and user behavior patterns, the application can offer personalized product recommendations, increasing customer satisfaction and driving sales.

Applications can also adapt their behavior dynamically based on real-time AI insights. For example, a customer support application can use sentiment analysis APIs to gauge customer satisfaction and adjust its responses accordingly. By understanding the user’s sentiment, the application can respond with empathy, providing a more personalized and satisfying customer experience.

It follows that the enterprise’s data and AI strategy must evolve in tandem to enable this upgrade in how we define and deliver the scope of software applications. In the next section, we will delve deeper into AI-driven insights and how they can transform the way we present data to users.

2. AI-Driven Insights: Transforming Data into Predictive Power

With enterprises investing significantly in AI, it is no longer enough to present users with raw data. The true power of AI lies in its ability to derive valuable insights from data and provide predictive capabilities that go beyond basic numbers. By incorporating AI-driven insights into software applications, we can give users predictive power and enable them to make informed decisions.

Traditionally, software applications have displayed historical data or real-time information to users.
For instance, an analytics dashboard might show the number of defects in the past 7 days. With AI-driven insights, we can go a step further. Instead of merely presenting past data, we can use AI models to provide predictions and forecasts based on historical patterns. This predictive capability allows users to anticipate potential issues, plan ahead, and take proactive measures to mitigate risks.

AI-driven insights also enable software applications to provide context and actionable recommendations based on the data presented. For example, an inventory management application can use AI models to analyze current stock levels, market trends, and customer demand. Users can then receive intelligent suggestions on optimal stock replenishment, pricing strategies, or product recommendations to maximize profitability.

Furthermore, AI-driven insights can be instrumental in optimizing resource allocation and operational efficiency. For instance, in a logistics application, AI algorithms can analyze traffic patterns, weather conditions, and historical data to provide accurate delivery time estimates. With this information, users can plan their operations more effectively, minimize delays, and enhance overall customer satisfaction.

Next steps

In this blog, we introduced the concept of AI-enabled software application development and emphasized the need to rethink traditional architecture and design. Applications should leverage AI models to modify their behavior and engage users effectively. Additionally, applications must go beyond raw data to provide predictive capabilities. These insights empower users and enable informed decision-making.
In the next blog post, we will delve into parts 3 and 4. Part 3 covers the feedback loop between applications and AI models, which enhances user experience and enriches the data store. Part 4 covers the evolution of enterprise architecture guidelines and governance in the context of AI-enabled software application development. Stay tuned to learn more about these crucial topics.

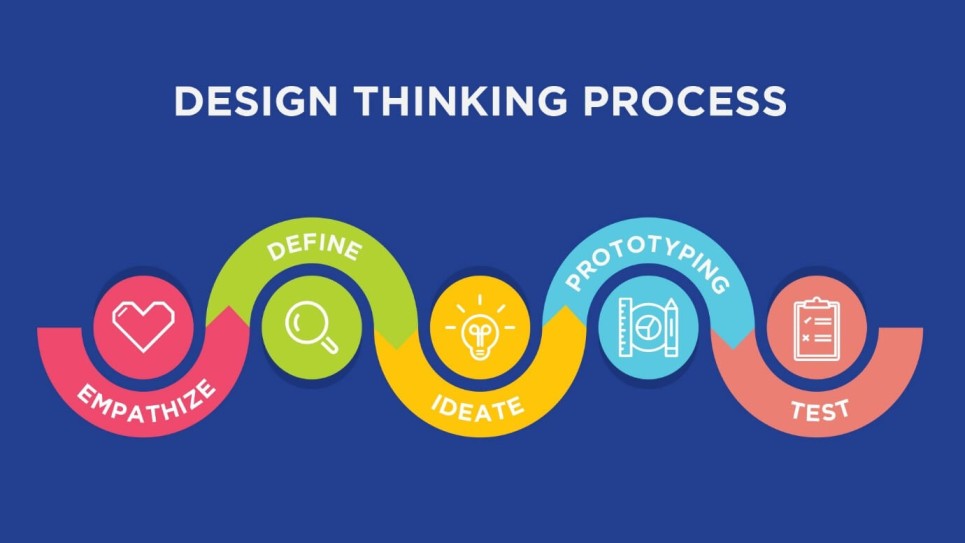

How to choose the right Agile methodology for project development in a frugal way

In the past, technology’s role was limited to that of a business enabler. Today, the business world increasingly sees technology as the main driver of business transformation. This puts enterprises under tremendous pressure to build disruptive products capable of transforming the entire business world. An efficient project management model for software development is crucial to delivering the right technology solutions at the right time.

Traditional project development models such as Waterfall are too rigid and depend heavily on documentation. In such models, customers get a glimpse of their long-awaited product or application only toward the end of the delivery cycle. Enterprises need continuous communication and improvement throughout the project development cycle, irrespective of diverse working conditions. Only then can they complete application development within the stipulated time without compromising on quality. Without an efficient delivery method, the software development team often comes under pressure to release the product on time.

According to a survey by Tech Beacon, almost 51% of companies worldwide are leaning towards agile, while 16% have already adopted pure agile practices. While these figures favour agile for application development, both service providers and customers face some major challenges while practising agile.

Communication gap

When a team is geographically distributed, communication gaps are a common problem. In such a distributed agile development model, passing information from one team to another can create confusion and lose the essence of the context. An outcome-based solutions model with team members present at client locations can enable direct client interactions and prompt responses on both sides.
Time zone challenges

Another challenge that clients face in a distributed agile development environment is diverse time zones. With teams working in different time zones, it is often difficult to find common work hours when every team is present at the same time. Through an outcome-based solutions model, customers can stay relaxed and get prompt assistance during emergencies. Moreover, the client stays updated about project progress, and iterations become easier.

Cultural differences

In a distributed agile team, differences in work ethics, commitment, holidays, and culture create a gap between the development team and the customer. In situations like these, a panel of experts, including ex-CIOs and industry specialists, can help by giving customers valuable insights on current market trends and solutions to close any cultural gaps.

Scope creep

Scope creep is another issue faced by countless customers whose agile teams work on software development projects from multiple locations. Here, the missing pillar is an offshore scrum master who defines and estimates the tasks. This role works alongside onsite representatives who communicate every client requirement.

A closer look at these challenges shows the need for a properly architected and innovative agile model to resolve them. With a carefully devised agile strategy, the client and development sides can interact frequently and overcome these obstacles much more easily.